How to implement and customize Business Central's built-in archiving system.

Yo! Today I'm changing how I do blog things and trying a more free-form style of writing. Let's start with the story of how exactly Codeunit 600 "Data Archive" works.

Imagine you have a filing cabinet full of old customer records. Your boss says "Delete everything older than 5 years to save space." But what if the auditors come next year and ask for those records? You need them gone, but also kept!

That's exactly what Data Archive does. It's like taking a photograph of your records before shredding them.

The Simple Explanation

Data Archive is a copy machine for database records before you delete them.

Here's what happens:

- You tell it: "I'm about to delete some stuff, start taking pictures"

- You explicitly save the records you want to archive

- Then you delete the records from your table

- Behind the scenes, Data Archive saves a copy as JSON text

- Later, you can open these "photos" to see what was deleted

The key: You archive FIRST, then delete. This gives you full control over what gets archived.

Can You Use It On Custom Tables?

YES! Absolutely! It works on:

- Standard BC tables (Customer, Item, Sales Header, etc.)

- Your custom tables (Table 50100, 50101, etc.)

- Extension tables

- ANY table in Business Central (except system tables)

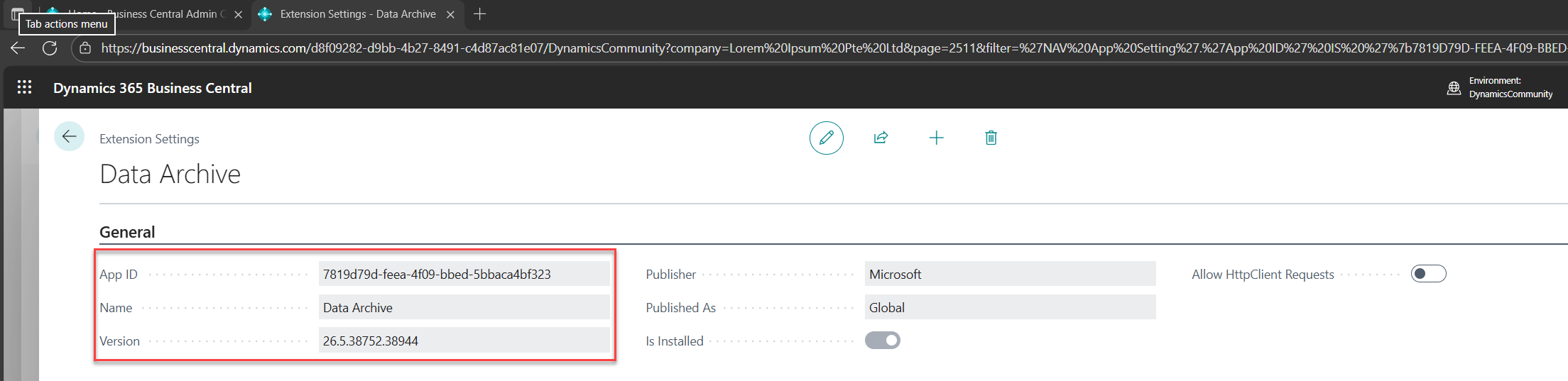

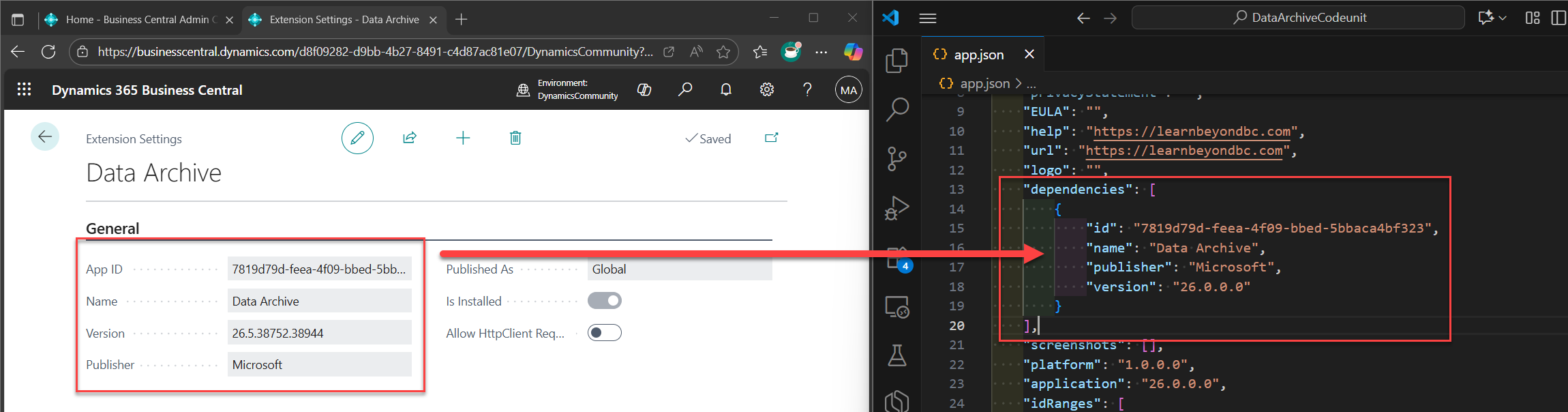

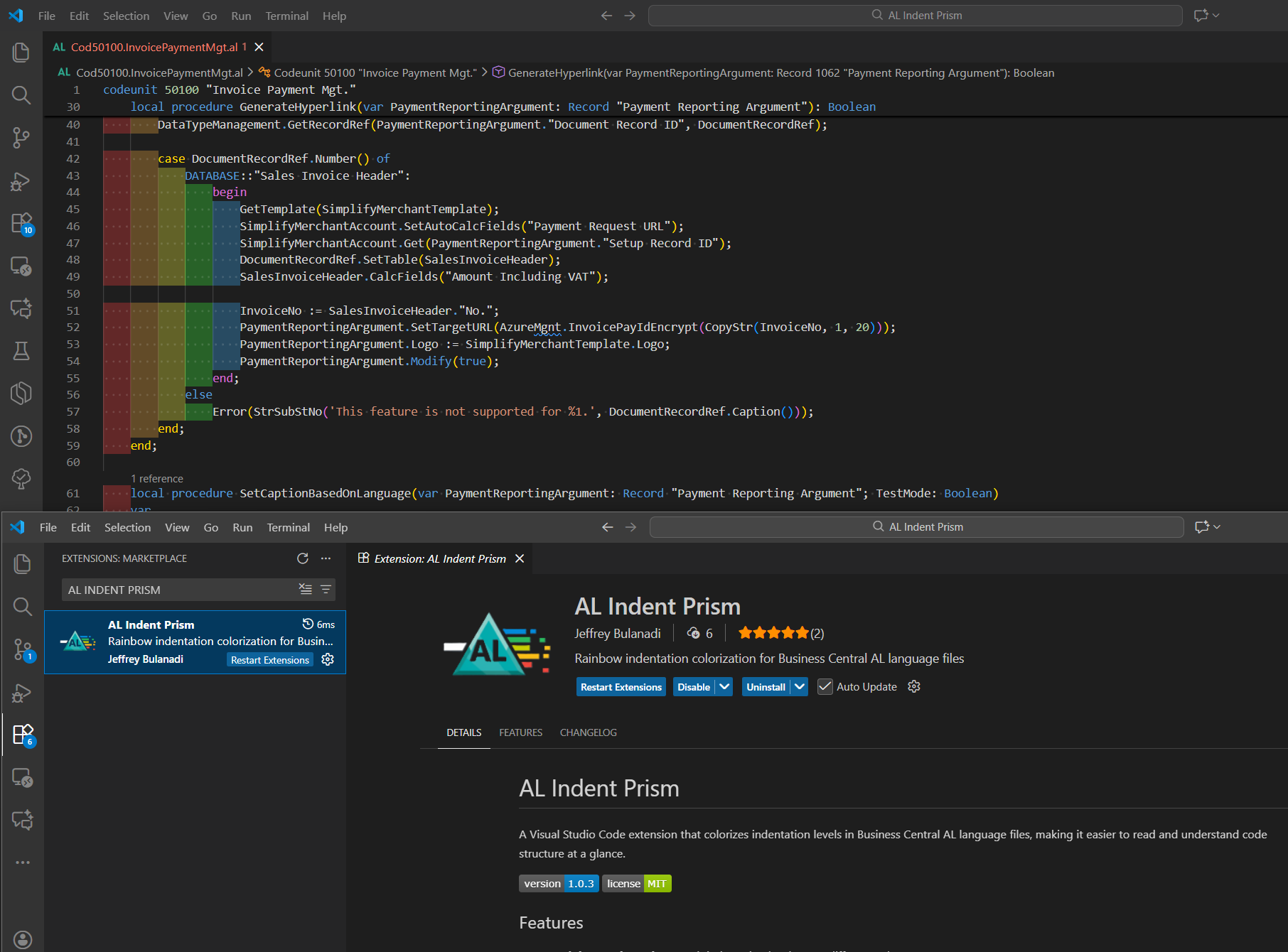

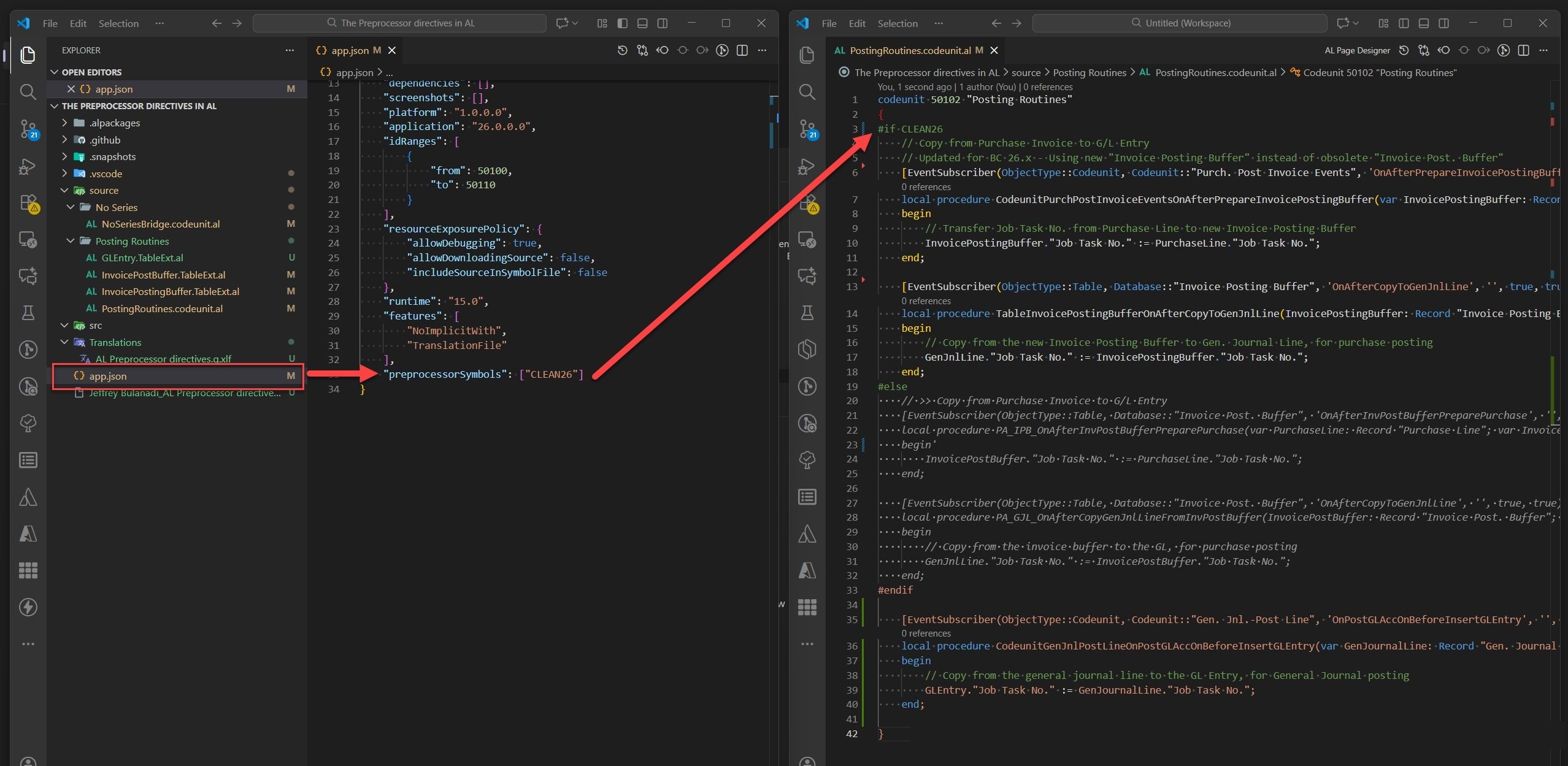

Important: Add Data Archive Dependency

Before you can use Data Archive in your extension, you need to add it as a dependency in your app.json:

{

"id": "your-app-id",

"name": "Your Extension Name",

"publisher": "Your Publisher",

"version": "1.0.0.0",

"dependencies": [

{

"id": "7819d79d-feea-4f09-bbed-5bbaca4bf323",

"name": "Data Archive",

"publisher": "Microsoft",

"version": "26.0.0.0"

}

]

}

Where to find these values:

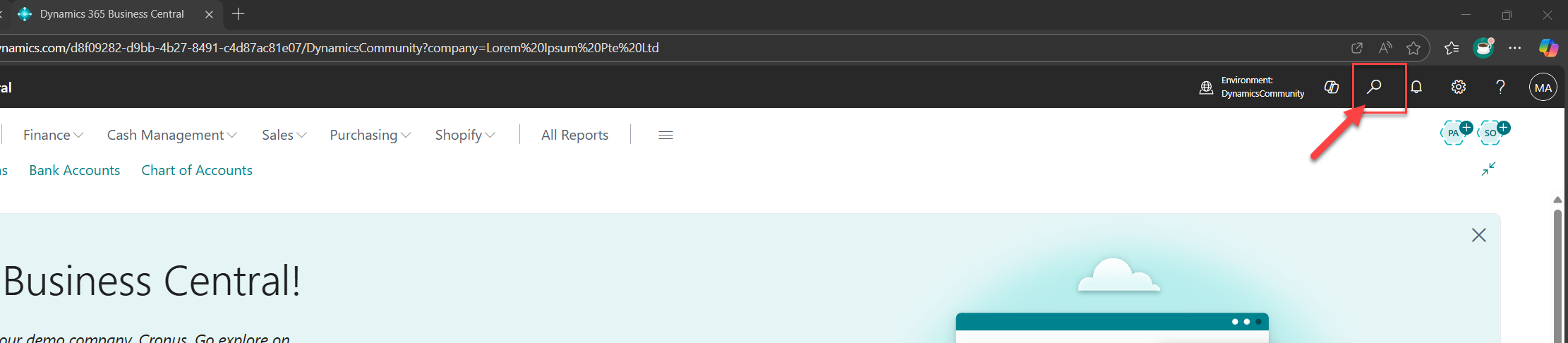

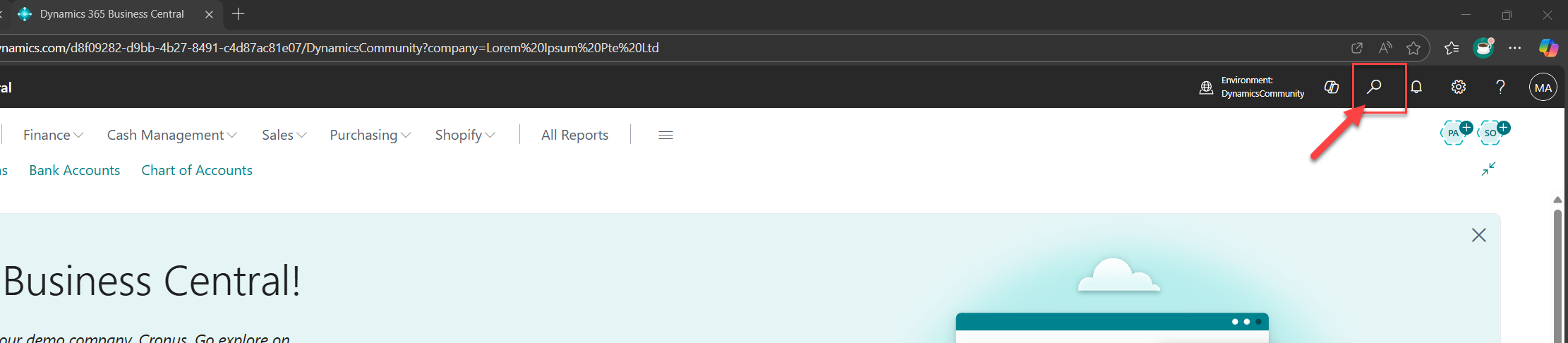

- Launch Business Central and click the Search icon located at the top-right corner of the navigation menu.

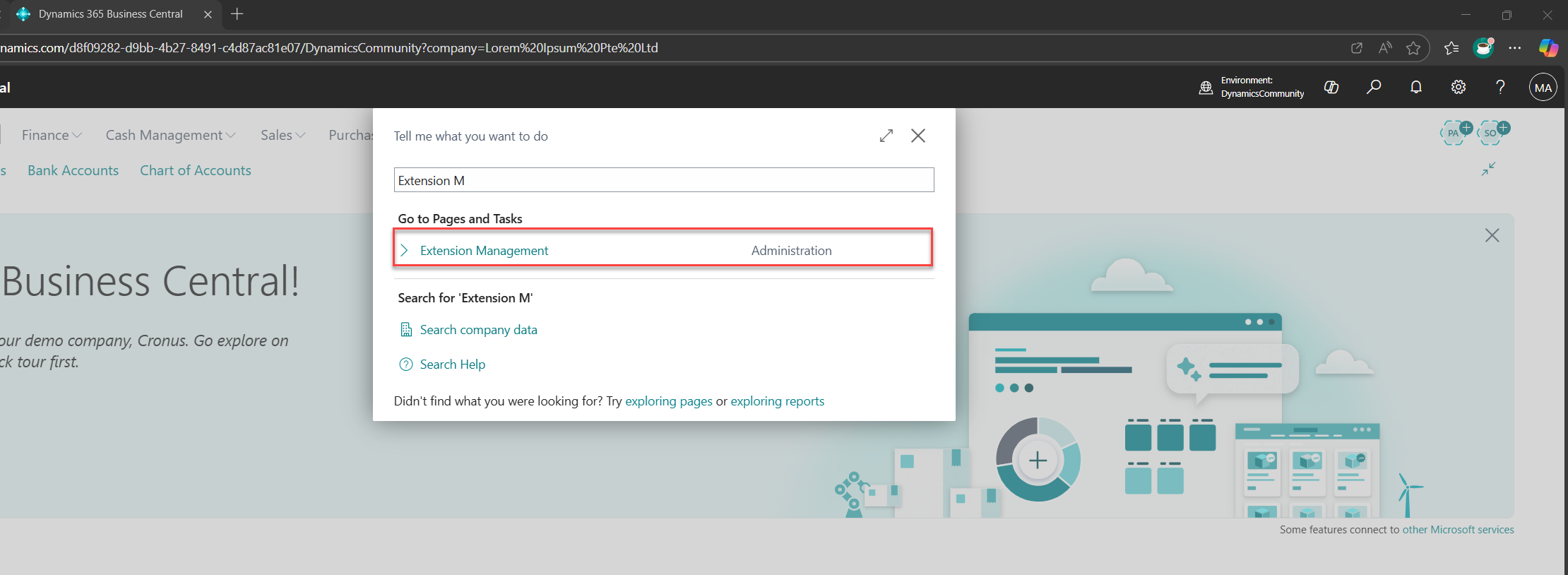

- In the Tell me what you want to do search bar, type and select Extension Management.

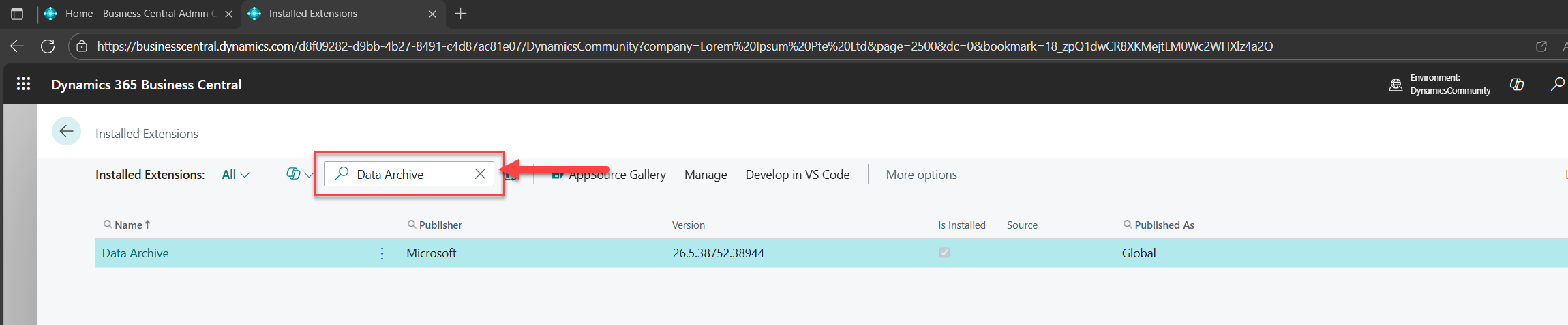

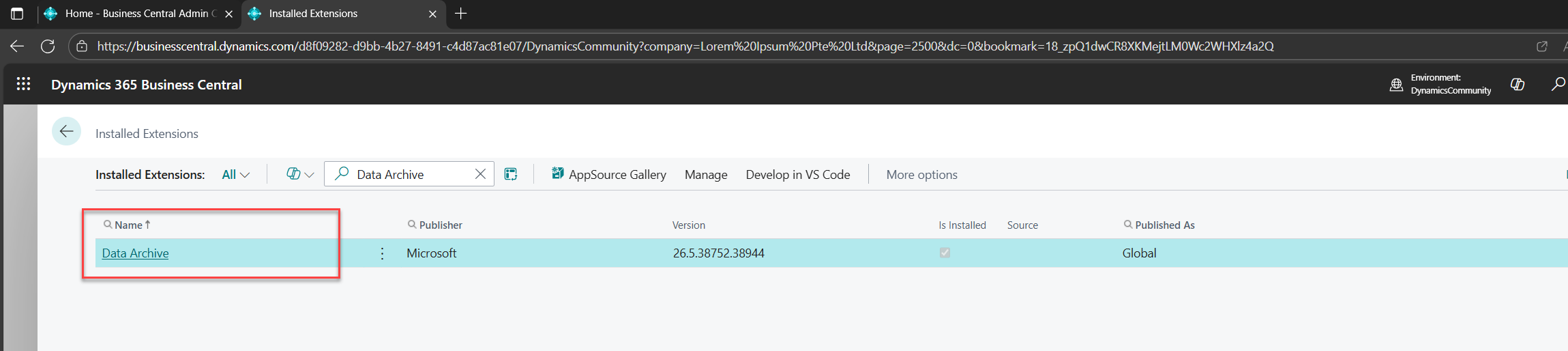

- Search for Data Archive using the search bar located in the top menu of the Extension Management page.

- Click on it to see the App ID and version

- Copy those values to your app.json

Why this is needed:

- Data Archive is a separate Microsoft extension, not part of the base application

- Your extension needs to reference it to use Codeunit 600 "Data Archive"

- Without this dependency, you'll get compilation errors like: "Codeunit 'Data Archive' is missing"

Once you add this dependency and republish your extension, you're ready to use Data Archive!

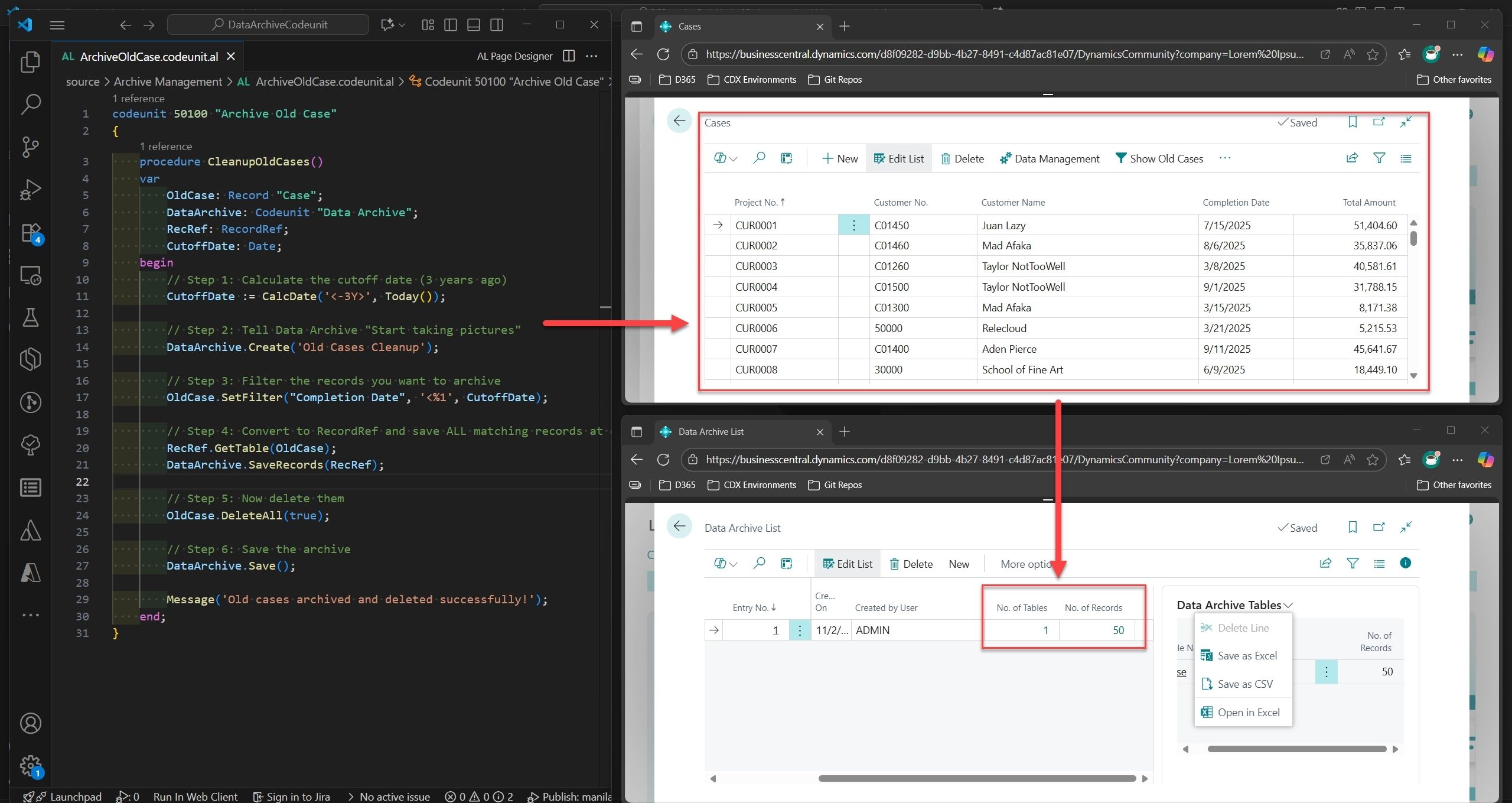

Real World Example: Your Custom "Case" Table

Let's say you have a custom table called "Case" that contains all the information related to a particular project or job.

table 50100 "Case"

{

fields

{

field(1; "ID"; Integer) { }

field(2; "Project No."; Code[20]) { TableRelation = Job; }

field(3; "Customer No."; Code[20]) { TableRelation = Customer; }

field(4; "Customer Name"; Text[100]) { }

field(5; "Completion Date"; Date) { }

field(6; "Total Amount"; Decimal) { }

}

keys { key(PK; "Project No.") { Clustered = true; } }

}

You want to delete cases older than 3 years but keep a backup. Here's the complete working code:

codeunit 50100 "Archive Old Cases"

{

procedure CleanupOldCases()

var

OldCase: Record "Case";

DataArchive: Codeunit "Data Archive";

RecRef: RecordRef;

CutoffDate: Date;

begin

// Step 1: Calculate the cutoff date (3 years ago)

CutoffDate := CalcDate('<-3Y>', Today());

// Step 2: Tell Data Archive "Start taking pictures"

DataArchive.Create('Old Cases Cleanup');

// Step 3: Filter the records you want to archive

OldCase.SetFilter("Completion Date", '<%1', CutoffDate);

// Step 4: Convert to RecordRef and save ALL matching records at once

RecRef.GetTable(OldCase);

DataArchive.SaveRecords(RecRef);

// Step 5: Now delete them

OldCase.DeleteAll(true);

// Step 6: Save the archive

DataArchive.Save();

Message('Old cases archived and deleted successfully!');

end;

}

That's it! The key is using SaveRecords(RecRef) which archives ALL filtered records in one shot, then delete them. Clean and efficient!

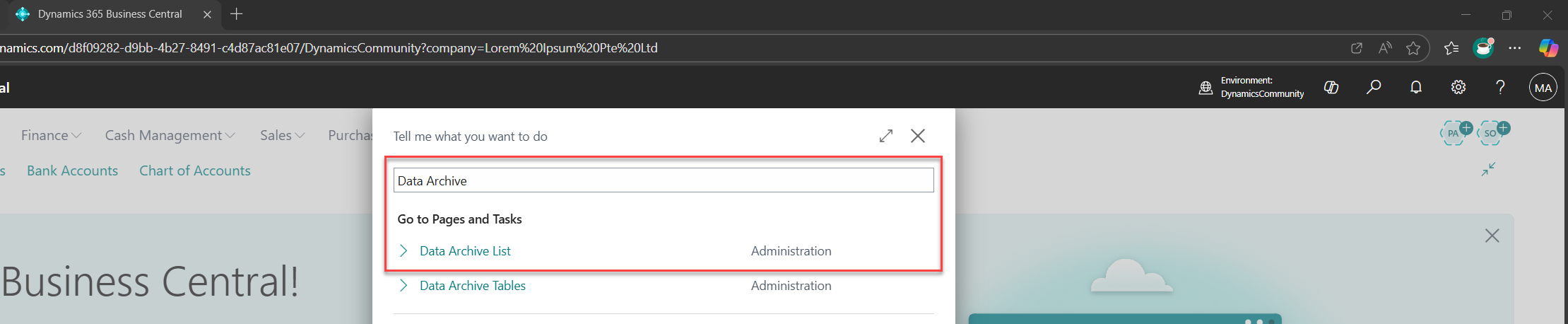

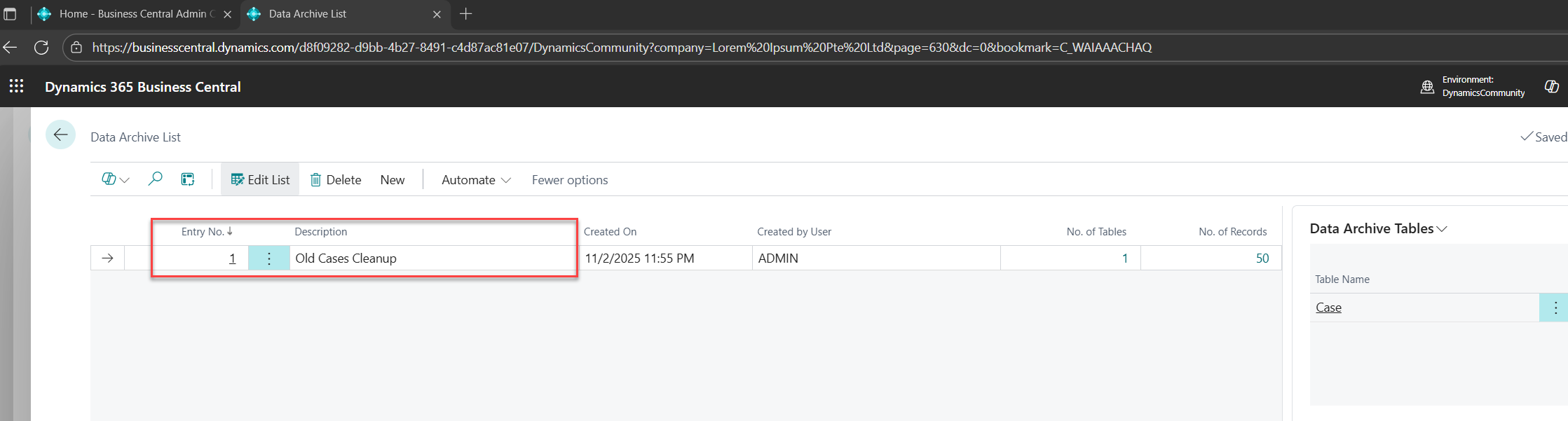

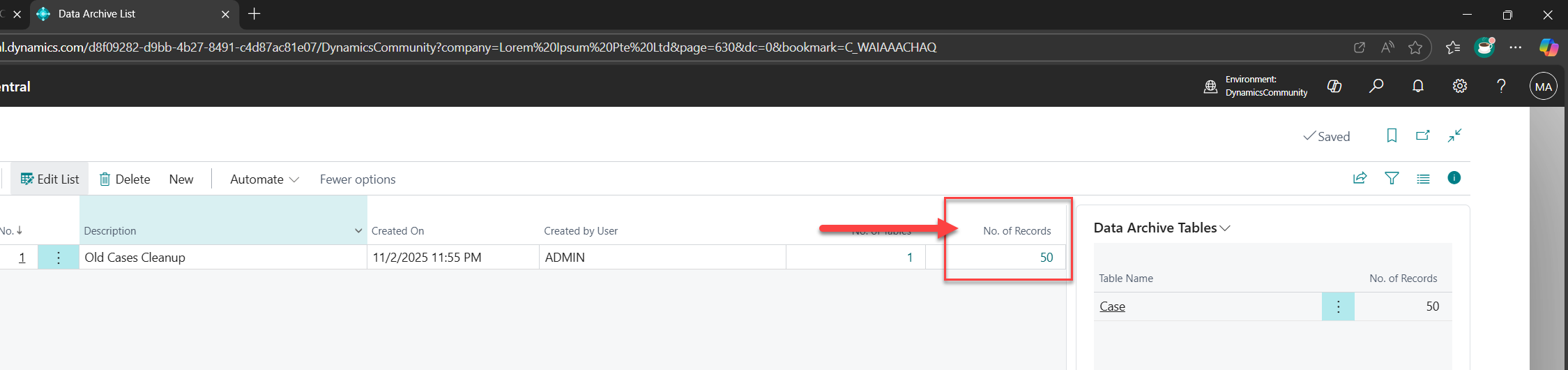

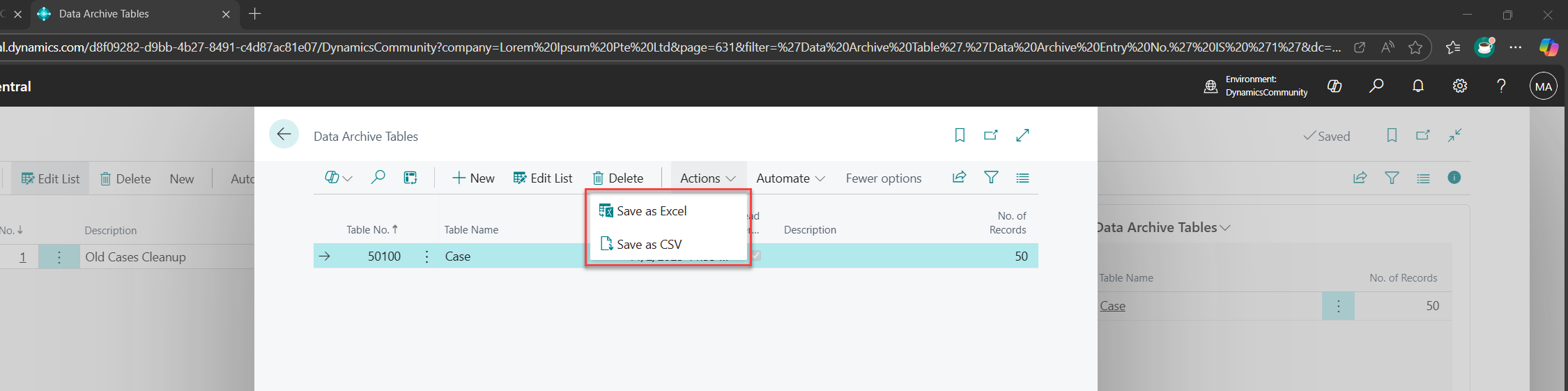

How to See What Was Archived

- Launch Business Central and click the Search icon located at the top-right corner of the navigation menu.

- Search for "Data Archive List" on the Tell me what you want to do search bar

- You'll see your archive: "Old Cases Cleanup"

- Click on it to see all the records that were saved

- Export to Excel or CSV if you need to analyze them

The Key Methods You Need to Know

Think of Data Archive like a video recorder with 6 buttons:

| Method | What It Does | Real-World Analogy | Recommended? |
|---|---|---|---|
| Create('Name') | Start a new archive | Open your camera app | Always use |
| SaveRecord(Record) | Save ONE specific record | Take one photo | For single records |
| SaveRecords(RecordRef) | Save ALL filtered records | Take a burst of photos | BEST for bulk |
| Save() | Finalize and commit the archive | Save photos to gallery | Always use |
| StartSubscriptionToDelete() | Auto-save anything deleted | Motion-detection mode | Advanced, may not work everywhere |
| StopSubscriptionToDelete() | Stop auto-saving | Turn off motion detection | Only if using subscription |
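To make the "take one photo" button concrete, here is a minimal sketch of archiving a single record before deleting it. The codeunit number, its name, and the ArchiveAndDelete procedure are made up for illustration; only the Data Archive calls listed in the table above come from the real API, and the sketch assumes the sample "Case" table from this guide.

```al
codeunit 50110 "Archive Single Case"
{
    // Hypothetical helper (not part of the original demo):
    // archives one "Case" record, then deletes it.
    procedure ArchiveAndDelete(ProjectNo: Code[20])
    var
        CaseRec: Record "Case";
        DataArchive: Codeunit "Data Archive";
        NotAvailableErr: Label 'Data Archive is not available in this environment.';
        ArchiveDescLbl: Label 'Case %1 removed', Comment = '%1 = Project No.';
    begin
        // Stop early if no archive provider is installed
        if not DataArchive.DataArchiveProviderExists() then
            Error(NotAvailableErr);

        // "Project No." is the primary key of the sample table
        CaseRec.Get(ProjectNo);

        DataArchive.Create(StrSubstNo(ArchiveDescLbl, ProjectNo)); // open the camera app
        DataArchive.SaveRecord(CaseRec);                           // take one photo
        CaseRec.Delete(true);                                      // shred the original
        DataArchive.Save();                                        // save to the gallery
    end;
}
```

For anything more than a handful of records, the filtered SaveRecords(RecRef) call is still the better fit.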

What Gets Saved?

Everything in the record! For your "Case" example:

- ID (auto-increment field)

- Project No.

- Customer No.

- Customer Name

- Completion Date

- Total Amount

- SystemId, SystemCreatedAt, SystemModifiedAt

- All fields (even ones you don't usually see)

It's saved as JSON text that looks like this:

{

"1": "1",

"2": "JOB001",

"3": "C00010",

"4": "Contoso Ltd.",

"5": "2020-05-15",

"6": "50000.00"

}

Where the numbers (1, 2, 3, 4, 5, 6) are the field numbers from your table.
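If you're curious where those field-number keys could come from, here is a rough sketch that builds the same kind of object from a RecordRef. This is NOT the Data Archive implementation, just an illustration of the idea; the codeunit number, its name, and RecordToJson are hypothetical, and the exact text formatting of values in a real archive may differ.

```al
codeunit 50111 "Record Json Sketch"
{
    // Illustration only: this is not how Microsoft's provider is written.
    // Builds a JSON object whose keys are field numbers and whose values
    // are the field values formatted as text.
    procedure RecordToJson(var RecRef: RecordRef): JsonObject
    var
        FldRef: FieldRef;
        Result: JsonObject;
        i: Integer;
    begin
        for i := 1 to RecRef.FieldCount() do begin
            FldRef := RecRef.FieldIndex(i);
            // Skip FlowFields and FlowFilters, they hold no stored data
            if FldRef.Class() = FieldClass::Normal then
                // Format 9 is the culture-independent XML format
                Result.Add(Format(FldRef.Number()), Format(FldRef.Value(), 0, 9));
        end;
        exit(Result);
    end;
}
```

You could call it with a RecordRef obtained from RecRef.GetTable(OldCase) and write the result to text with WriteTo to compare it against a real archive entry.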

How Does It ACTUALLY Work? (The Detective Story)

Let's trace what happens when you run that code above using the recommended SaveRecords() pattern. I'll explain it like we're debugging step-by-step:

Step 1: You Call DataArchive.Create('Old Cases Cleanup')

What happens:

Your Code → Codeunit 600 "Data Archive" → Codeunit 610 "Implementation" → Codeunit 605 "Provider"

Behind the scenes:

// In Codeunit 605 "Data Archive Provider" (simplified)

procedure Create(Description: Text): Integer

begin

DataArchive.Init();

DataArchive.Description := CopyStr(Description, 1, MaxStrLen(DataArchive.Description)); // 'Old Cases Cleanup' in our example

DataArchive.Insert(true); // Creates a new row in Table 600

CurrentDataArchiveEntryNo := DataArchive."Entry No."; // Entry No. = 1 in our example

exit(CurrentDataArchiveEntryNo); // Returns the entry number

end;

Result: A new row is created in Table 600 "Data Archive":

| Entry No. | Description | No. of Tables | No. of Records |

|---|---|---|---|

| 1 | Old Cases Cleanup | 0 | 0 |

Think of this as creating a new folder on your computer called "Old Cases Cleanup".

Step 2: You Filter and Archive Records with SaveRecords()

What happens: This is where the archiving magic happens - BEFORE you delete anything!

// Your code:

OldCase.SetFilter("Completion Date", '<%1', CutoffDate);

RecRef.GetTable(OldCase);

DataArchive.SaveRecords(RecRef);

Behind the scenes:

1. RecRef.GetTable(OldCase) converts your filtered record set to a RecordRef

2. DataArchive.SaveRecords(RecRef) reads ALL filtered records

3. For EACH record in the filter:

- Read the record data

- Convert record to JSON

- Add it to a cache (temporary storage in memory)

4. Records are NOT deleted yet - they're just copied to cache

Let's say you have 3 cases matching your filter. Here's what happens for each one:

// In Codeunit 605 "Data Archive Provider"

procedure SaveRecord(var RecRef: RecordRef)

begin

// RecRef points to the "Case" record being archived (nothing is deleted yet)

SaveRecordsToBuffer(RecRef, false);

end;

local procedure SaveRecordsToBuffer(var RecRef: RecordRef; AllWithinFilter: Boolean)

var

TableJson: JsonArray;

FieldList: List of [Integer];

begin

// 1. Get all field numbers from the table (1, 2, 3, 4, 5, 6)

GetFieldListFromTable(RecRef.Number, FieldList); // Returns [1, 2, 3, 4, 5, 6]

// 2. Convert record to JSON

TableJson.Add(GetRecordJsonFromRecRef(RecRef, FieldList));

// 3. Store in cache (Dictionary in memory)

CachedDataRecords.Add(TableIndex, TableJson);

end;

After archiving 3 cases, the cache looks like:

CachedDataRecords (in memory):

TableIndex 1 (Table 50100 "Case"):

[

{"1": "1", "2": "JOB001", "3": "C00010", "4": "Contoso", "5": "2020-05-15", "6": "50000"},

{"1": "2", "2": "JOB002", "3": "C00020", "4": "Fabrikam", "5": "2019-03-20", "6": "75000"},

{"1": "3", "2": "JOB003", "3": "C00030", "4": "Alpine Ski", "5": "2020-11-30", "6": "120000"}

]

Important: The records are STILL in your "Case" table at this point! They're just copied to cache (RAM).

Step 3: You Delete the Records with DeleteAll(true)

What happens:

// Your code:

OldCase.DeleteAll(true);

Now the actual deletion happens:

1. All 3 records are removed from Table 50100 "Case"

2. The delete operation completes

3. The archived data is still safe in memory (cache)

Think of it like: You made photocopies (Step 2), now you're shredding the originals (Step 3).

Step 4: You Call DataArchive.Save()

What happens: NOW the cached data is written to the database!

// In Codeunit 605

procedure Save()

var

TableNo: Integer;

i: Integer;

begin

// For each table that had records archived (in our case, just Table 50100)

for i := 1 to CachedDataTableList.Count() do begin

CachedDataTableList.Get(i, TableNo); // TableNo = 50100

SaveTable(i, TableNo); // Save the JSON to database

end;

end;

local procedure SaveTable(TableIndex: Integer; TableNo: Integer)

var

DataArchiveTable: Record "Data Archive Table";

TempBlob: Codeunit "Temp Blob";

jsonArray: JsonArray;

OutStr: OutStream;

InStr: InStream;

begin

// 1. Create a new row in Table 601

DataArchiveTable.Init();

DataArchiveTable."Data Archive Entry No." := 1; // Links to our archive

DataArchiveTable."Table No." := 50100; // "Case"

DataArchiveTable."Entry No." := 1;

// 2. Insert into database

DataArchiveTable.Insert(true);

// 3. Get JSON from cache

CachedDataRecords.Get(TableIndex, jsonArray);

// 4. Write the JSON to a stream and save it to the Media field (simplified)

TempBlob.CreateOutStream(OutStr);

jsonArray.WriteTo(OutStr);

TempBlob.CreateInStream(InStr);

DataArchiveTable."Table Data (json)".ImportStream(InStr, 'Case Data');

DataArchiveTable."No. of Records" := 3; // We archived 3 records

// 5. Do a final update

DataArchiveTable.Modify(true);

end;

Result: Table 601 "Data Archive Table" now has:

| Entry No. | Data Archive Entry No. | Table No. | Table Name | No. of Records | Table Data (json) |

|---|---|---|---|---|---|

| 1 | 1 | 50100 | Case | 3 | [BLOB with JSON] |

The JSON BLOB contains all 3 deleted cases!

The Final Database State

After everything is done:

Table 600 "Data Archive":

| Entry No. | Description | No. of Tables | No. of Records |

|---|---|---|---|

| 1 | Old Cases Cleanup | 1 | 3 |

Table 601 "Data Archive Table":

| Entry No. | Archive Entry | Table No. | No. of Records | JSON Data |

|---|---|---|---|---|

| 1 | 1 | 50100 | 3 | {...3 cases...} |

Table 50100 "Case":

| ID | Project No. | Customer No. | Customer Name | Completion Date | Total Amount |

|---|---|---|---|---|---|

| (empty - records deleted!) | | | | | |

Visual Timeline: What Happens When

TIME →

0ms: DataArchive.Create('Old Cases Cleanup')

└─> Table 600: Insert Entry No. 1 ✓

5ms: OldCase.SetFilter("Completion Date", '<%1', CutoffDate)

└─> Filter applied to recordset ✓

10ms: RecRef.GetTable(OldCase)

└─> Convert to RecordRef ✓

15ms: DataArchive.SaveRecords(RecRef) ← ARCHIVE HAPPENS HERE

├─> Read Record 1 (ID: 1, Project: JOB001)

│ └─> Convert to JSON

│ └─> Saves to cache: {"1": "1", "2": "JOB001", "3": "C00010", ...}

│

├─> Read Record 2 (ID: 2, Project: JOB002)

│ └─> Convert to JSON

│ └─> Saves to cache: {"1": "2", "2": "JOB002", "3": "C00020", ...}

│

└─> Read Record 3 (ID: 3, Project: JOB003)

└─> Convert to JSON

└─> Saves to cache: {"1": "3", "2": "JOB003", "3": "C00030", ...}

40ms: OldCase.DeleteAll(true) ← NOW DELETE

└─> All 3 records deleted from table ✓

45ms: DataArchive.Save() ← COMMIT TO DATABASE

└─> Write cache to Table 601 ✓

└─> Table 601: Insert Entry No. 1 with JSON ✓

50ms: Message('Old cases archived and deleted successfully!')

└─> User notification ✓

55ms: DONE! ✓

The Three Tables Explained

Table 600 "Data Archive" (The Header)

Purpose: Keeps track of each archive session

Think of it like: A folder name

table 600 "Data Archive"

{

fields

{

field(1; "Entry No."; Integer) { } // Unique ID for this archive

field(6; "Description"; Text[80]) { } // Your description

field(7; "No. of Tables"; Integer) { } // How many tables archived

field(8; "No. of Records"; Integer) { } // Total records archived

}

}

Table 601 "Data Archive Table" (The Data)

Purpose: Stores the actual JSON data for each table

Think of it like: A ZIP file inside the folder

table 601 "Data Archive Table"

{

fields

{

field(1; "Data Archive Entry No."; Integer) { } // Links to Table 600

field(2; "Table No."; Integer) { } // Which table (50100, 18, 36, etc.)

field(3; "Entry No."; Integer) { } // Unique ID for this entry

field(10; "Table Data (json)"; Media) { } // THE JSON DATA!

field(11; "Table Fields (json)"; Media) { } // Field definitions

field(20; "No. of Records"; Integer) { } // Count of records in JSON

}

}

Table 602 "Data Archive Media Field" (For Images/Blobs)

Purpose: Handles special fields like pictures, PDFs, etc.

Think of it like: Separate attachments folder

If your table has a field like:

field(10; "Attachment"; Media) { }

The media bytes are saved separately in Table 602, and the JSON just stores a reference ID.

Common Questions Answered

When I first started learning this, the same questions kept coming up, so I've dissected them thoroughly here. If you're wondering the same things, you'll find your answers below.

Q: Should I use StartSubscriptionToDelete() or SaveRecords()?

A: Use SaveRecords() - it's more reliable! The StartSubscriptionToDelete() pattern is advanced and may not work in all environments or BC versions. The SaveRecords() pattern gives you explicit control:

// RECOMMENDED: Explicit archive-then-delete pattern

DataArchive.Create('My Archive');

OldCase.SetFilter("Completion Date", '<%1', CutoffDate);

RecRef.GetTable(OldCase);

DataArchive.SaveRecords(RecRef); // Archive first

OldCase.DeleteAll(true); // Then delete

DataArchive.Save();

This pattern:

- Works in all BC versions and environments

- Gives you full control over what gets archived

- Easy to understand and debug

- No subscription binding issues

Q: What if I only want to archive MY table, not others?

A: The SaveRecords() pattern shown above automatically does this! It only archives the specific filtered records you specify:

DataArchive.Create('Only My Table');

OldCase.SetFilter("Completion Date", '<%1', CutoffDate);

RecRef.GetTable(OldCase);

DataArchive.SaveRecords(RecRef); // Only archives filtered records

OldCase.DeleteAll(true);

DataArchive.Save();

Q: Does it slow down my deletes?

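A: Yes, slightly. Every archived record is serialized to JSON before it is deleted. As a rough ballpark, expect ~1-2 seconds of overhead for 1000 records, and measure it in your own environment if it matters.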

Q: Can I restore archived records back to the table?

A: Not directly. You'd need to:

- Export to Excel/CSV

- Parse the JSON manually

- Re-insert records

It's a backup, not a "recycle bin"!
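To show what "parse the JSON manually and re-insert" might look like, here is a hedged sketch for the sample "Case" table. It assumes the archived JSON is an array of objects keyed by field number with text values, exactly as shown earlier in this guide; if your archive looks different, the parsing has to change. The codeunit number, its name, and both procedures are hypothetical.

```al
codeunit 50112 "Restore Case Sketch"
{
    // Hypothetical helper: re-inserts "Case" rows from archived JSON text.
    // Assumes an array of objects keyed by field number ("1".."6").
    procedure RestoreCases(JsonText: Text)
    var
        CaseRec: Record "Case";
        ArchivedRecords: JsonArray;
        RecordToken: JsonToken;
        RecordObject: JsonObject;
    begin
        ArchivedRecords.ReadFrom(JsonText); // raises an error on invalid JSON
        foreach RecordToken in ArchivedRecords do begin
            RecordObject := RecordToken.AsObject();
            CaseRec.Init();
            // Format 9 parses the culture-independent XML representation
            Evaluate(CaseRec."ID", GetText(RecordObject, '1'), 9);
            CaseRec."Project No." :=
                CopyStr(GetText(RecordObject, '2'), 1, MaxStrLen(CaseRec."Project No."));
            CaseRec."Customer No." :=
                CopyStr(GetText(RecordObject, '3'), 1, MaxStrLen(CaseRec."Customer No."));
            CaseRec."Customer Name" :=
                CopyStr(GetText(RecordObject, '4'), 1, MaxStrLen(CaseRec."Customer Name"));
            Evaluate(CaseRec."Completion Date", GetText(RecordObject, '5'), 9);
            Evaluate(CaseRec."Total Amount", GetText(RecordObject, '6'), 9);
            CaseRec.Insert(true);
        end;
    end;

    local procedure GetText(RecordObject: JsonObject; FieldKey: Text): Text
    var
        ValueToken: JsonToken;
    begin
        // Get raises an error if the key is missing, which is what we want here
        RecordObject.Get(FieldKey, ValueToken);
        exit(ValueToken.AsValue().AsText());
    end;
}
```

Notice how much table-specific knowledge this needs. That is exactly why Data Archive should be treated as a backup and not as an undo button.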

Q: What happens if my code crashes after SaveRecords but before Save()?

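A: The cache lives in memory, so it is lost. If the failure happens before DeleteAll, nothing has been deleted yet. If it happens after DeleteAll but before Save(), the runtime error rolls back the uncommitted transaction, so the deleted records come back as long as nothing called Commit in between. This is why the SaveRecords pattern is safer! For extra safety, check that an archive provider is available before you start: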

if not DataArchive.DataArchiveProviderExists() then

Error('Data Archive not available');

DataArchive.Create('Important Cleanup');

// Archive first

OldCase.SetFilter("Completion Date", '<%1', CutoffDate);

RecRef.GetTable(OldCase);

DataArchive.SaveRecords(RecRef);

// Only delete if archiving succeeded

OldCase.DeleteAll(true);

// Commit the archive

DataArchive.Save();

If an error occurs anywhere between SaveRecords and Save, the uncommitted transaction is rolled back and the records remain in your table, so no data is lost.
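If you want to catch a failure instead of letting it bubble up to the user, one option in AL is to run the cleanup through Codeunit.Run and check the return value. The sketch below assumes the "Archive Old Cases" codeunit from this guide; the two wrapper codeunits, their numbers, and RunSafely are made up for illustration. Keep in mind that Codeunit.Run with a checked return value cannot be called while a write transaction is already open.

```al
codeunit 50101 "Archive Old Cases Job"
{
    // Hypothetical wrapper so the cleanup can be started with Codeunit.Run
    trigger OnRun()
    var
        ArchiveOldCases: Codeunit "Archive Old Cases";
    begin
        ArchiveOldCases.CleanupOldCases();
    end;
}

codeunit 50102 "Archive Old Cases Launcher"
{
    // Hypothetical entry point: traps any error raised by the cleanup
    procedure RunSafely()
    var
        FailedMsg: Label 'Cleanup failed and was rolled back: %1', Comment = '%1 = error text';
    begin
        // When the return value is checked, an error inside the callee is
        // caught here and the callee's uncommitted changes are rolled back.
        if not Codeunit.Run(Codeunit::"Archive Old Cases Job") then
            Message(FailedMsg, GetLastErrorText());
    end;
}
```

The same wrapper shape also works if you later want to schedule the cleanup, since it exposes an OnRun trigger.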

Q: Can I see the JSON without exporting?

A: Yes! Use this code:

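var

DataArchiveTable: Record "Data Archive Table";

TempBlob: Codeunit "Temp Blob";

OutStr: OutStream;

InStr: InStream;

JsonText: Text;

begin

// Find the archived table row by archive entry number (avoids assuming the primary key layout)

DataArchiveTable.SetRange("Data Archive Entry No.", 1); // Your entry number

if not DataArchiveTable.FindFirst() then

exit;

// "Table Data (json)" is a Media field, so export it to a stream first

TempBlob.CreateOutStream(OutStr);

DataArchiveTable."Table Data (json)".ExportStream(OutStr);

TempBlob.CreateInStream(InStr);

InStr.ReadText(JsonText);

Message(JsonText); // See the raw JSON!

end;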

Key Insights for Junior Developers

1. It's Just JSON Storage

Data Archive doesn't do anything magical. It:

- Converts records to JSON (like format(Record, 0, 9))

- Stores JSON as BLOB in Table 601

- Provides methods to make this easy

2. The SaveRecords Pattern Is Most Reliable

Always use the explicit archive-then-delete pattern:

RecRef.GetTable(YourRecord);

DataArchive.SaveRecords(RecRef); // Archive FIRST

YourRecord.DeleteAll(true); // Delete SECOND

This gives you full control and works in all BC environments. The subscription pattern (StartSubscriptionToDelete) is advanced and may not work everywhere.
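For completeness, here is roughly what the full subscription pattern looks like, so you can recognize it when you see it. Treat it as a sketch under the same caveat as above: it may not capture deletes in every environment, so verify the archive contents before trusting it. The codeunit number and name and the CleanupWithSubscription procedure are hypothetical.

```al
codeunit 50113 "Archive Cases Subscription"
{
    // Hypothetical example of the ADVANCED pattern: deletes are captured
    // while the subscription is active, with no explicit SaveRecords call.
    procedure CleanupWithSubscription()
    var
        OldCase: Record "Case";
        DataArchive: Codeunit "Data Archive";
    begin
        OldCase.SetFilter("Completion Date", '<%1', CalcDate('<-3Y>', Today()));
        if OldCase.IsEmpty() then
            exit; // nothing to archive, so don't create an empty archive

        DataArchive.Create('Old Cases Cleanup (subscription)');
        DataArchive.StartSubscriptionToDelete(); // motion detection ON
        OldCase.DeleteAll(true);                 // deletes should be captured here
        DataArchive.StopSubscriptionToDelete();  // motion detection OFF
        DataArchive.Save();                      // commit the archive
    end;
}
```

Because anything deleted while the subscription is active may end up in the archive, keep the Start/Stop window as small as possible.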

3. It's Not a Recycle Bin

Think of it as:

- Audit trail / compliance archive

- "Just in case" backup before bulk deletes

- NOT for undo/restore operations

- NOT for frequent access to old data

4. Performance Considerations

❌ This is SLOW (1000 records = 1000 function calls)

if Rec.FindSet() then

repeat

DataArchive.SaveRecord(Rec);

until Rec.Next() = 0;

✔️ This is FAST and RELIABLE (1000 records = 1 function call)

RecRef.GetTable(Rec);

DataArchive.SaveRecords(RecRef);

Rec.DeleteAll(true);

❕ This is the ADVANCED option (may not work in all environments)

DataArchive.StartSubscriptionToDelete();

Rec.DeleteAll(true);

DataArchive.StopSubscriptionToDelete();

Recommendation: Use SaveRecords() for the best balance of performance and reliability!

5. Works Everywhere

Turns out, the secret wasn't in the code; it was in declaring the Data Archive dependency properly in app.json.

- ✅ BC SaaS (Cloud)

- ✅ BC On-Premise

- ✅ Docker/Sandbox

- ✅ Production

- ✅ Standard tables

- ✅ Custom tables

- ✅ Extension tables

No special permissions or setup required (except having the Data Archive app installed).

Common Issues & Troubleshooting

Issue 1: "Codeunit 'Data Archive' is missing" (Compilation Error)

Symptom: Your extension won't compile, shows error on Codeunit "Data Archive"

Solution: Add the Data Archive dependency to your app.json:

"dependencies": [

{

"id": "7819d79d-feea-4f09-bbed-5bbaca4bf323",

"name": "Data Archive",

"publisher": "Microsoft",

"version": "26.0.0.0"

}

]

Get the exact App ID from Extension Management in Business Central, then republish your extension.

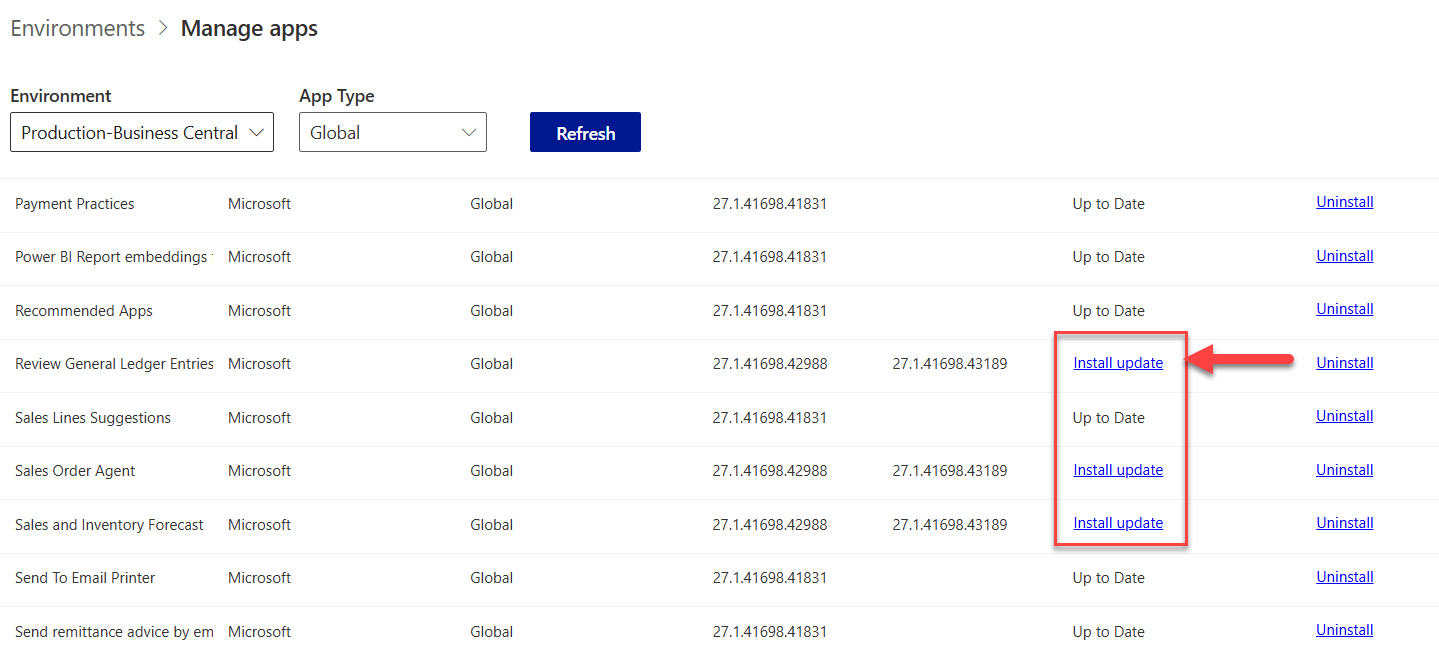

Issue 2: "No archiving app to use this feature" (Runtime Error)

Symptom: Code compiles but fails at runtime with this error

Solution:

- Install the "Data Archive" extension from Extension Management in Business Central

- Ensure it's installed in your environment

- Always check before using:

if not DataArchive.DataArchiveProviderExists() then exit;

Issue 3: Nothing Gets Archived

Symptom: No errors, but Data Archive List is empty

Solution: Make sure you called DataArchive.Save() at the end! This commits the archive to the database.

DataArchive.Create('My Archive');

DataArchive.SaveRecords(RecRef);

// Don't forget this! ↓

DataArchive.Save();

Issue 4: Missing Dependency During Installation

Symptom: "Extension cannot be installed because dependency 'Data Archive' is not available"

Solution:

- In BC SaaS: Data Archive should be available by default

- In BC On-Premise: Install the Data Archive extension from the DVD/installation media first

- In Docker: Make sure you're using a BC version 15.0 or higher

When NOT to Use Data Archive

Data Archive isn't suitable for:

- Real-time backups - Use BC's built-in backup mechanisms

- Transactional rollback - Not a replacement for proper error handling

- Frequent access to old data - Retrieving from JSON is slower than table queries

- Legal hold requirements - Export to external compliance systems instead

- Real-time undo - Use transactions instead

Summary & Key Takeaways

Data Archive = Automatic JSON Backup Before Delete

- You archive records as JSON first → then you delete them

- Works on ANY table (yours or Microsoft's)

- 7 lines of code to use it

Three Ways to Archive:

// Way 1: Single record (manual control)

DataArchive.SaveRecord(MyRecord);

MyRecord.Delete(true);

// Way 2: Bulk records (RECOMMENDED - filter and save all at once)

RecRef.GetTable(MyRecord);

DataArchive.SaveRecords(RecRef);

MyRecord.DeleteAll(true);

// Way 3: Subscription (ADVANCED - may not work everywhere)

DataArchive.StartSubscriptionToDelete(); // Use with caution

The Tables:

- Table 600 = Archive headers (your folder names)

- Table 601 = JSON data (the actual backup)

- Table 602 = Pictures/BLOBs (attachments)

Recommended Pattern (SaveRecords):

DataArchive.Create('Description');

MyTable.SetFilter(...); // Filter records to archive

RecRef.GetTable(MyTable);

DataArchive.SaveRecords(RecRef); // Archive FIRST

MyTable.DeleteAll(true); // Delete SECOND

DataArchive.Save(); // Commit to database

Why this pattern?

- Works in all BC environments

- Full control over what gets archived

- Safer - records aren't deleted until after archiving

View Results:

- Search "Data Archive List" in BC

- Export to Excel for analysis

- JSON format means records can't be easily restored (it's a backup, not undo)

TL;DR The ONE Sentence Explanation:

"Data Archive saves a JSON copy of the records you are about to delete, stored in Table 601, so you can review/export it later for auditing or compliance."

Quick Reference Card

┌─────────────────────────────────────────────────────────────┐

│ DATA ARCHIVE QUICK REFERENCE │

├─────────────────────────────────────────────────────────────┤

│ │

│ WHEN TO USE: │

│ • Deleting old records but need audit trail │

│ • Date compression scenarios │

│ • Compliance requirements │

│ • "Just in case" backup before bulk delete │

│ │

│ BASIC PATTERN: │

│ ┌────────────────────────────────────────────────────┐ │

│ │ DataArchive.Create('My Archive'); │ │

│ │ RecRef.GetTable(Table); │ │

│ │ DataArchive.SaveRecords(RecRef); // Archive first │ │

│ │ Table.DeleteAll(true); // Then delete │ │

│ │ DataArchive.Save(); │ │

│ └────────────────────────────────────────────────────┘ │

│ │

│ KEY METHODS: │

│ • Create(Text) → Start new archive │

│ • SaveRecord(Record) → Save one record │

│ • SaveRecords(RecRef) → Save filtered set │

│ • StartSubscription... → Auto-save all deletes │

│ • StopSubscription... → Stop auto-save │

│ • Save() → Commit to database │

│ • DiscardChanges() → Cancel archive │

│ │

│ WHERE DATA GOES: │

│ Table 600 → Headers (Entry No., Description) │

│ Table 601 → JSON Data (Field No. → Value) │

│ Table 602 → Media/BLOB files │

│ │

│ VIEW ARCHIVES: │

│ Search: "Data Archive List" │

│ Export: Excel or CSV │

│ │

│ WORKS ON: │

│ - Standard BC tables │

│ - Your custom tables │

│ - Extension tables │

│ - Any table in BC (except system tables) │

│ │

└─────────────────────────────────────────────────────────────┘

Support for the Community

Found this helpful? Share it with your BC developer community! 🚀

If you've encountered issues with Data Archive, found a better pattern, or have questions about archiving custom tables, drop a comment or reach out! I'll keep this guide updated as Microsoft evolves the Data Archive extension and Business Central best practices.

♻️ Repost to support the Microsoft Dynamics 365 Community and follow Jeffrey Bulanadi for technical insights above and beyond Business Central, AL development, and scalable integration architecture.

Demo Repository

Want to see working examples? Explore the complete demo code from this guide:

- Table 50100 "Case" - Custom table with relationships

- Codeunit 50100 "Archive Old Cases" - Production-ready archive pattern

Check out the Data Archive Demo Repository on GitHub for copy-paste ready code and additional examples!
